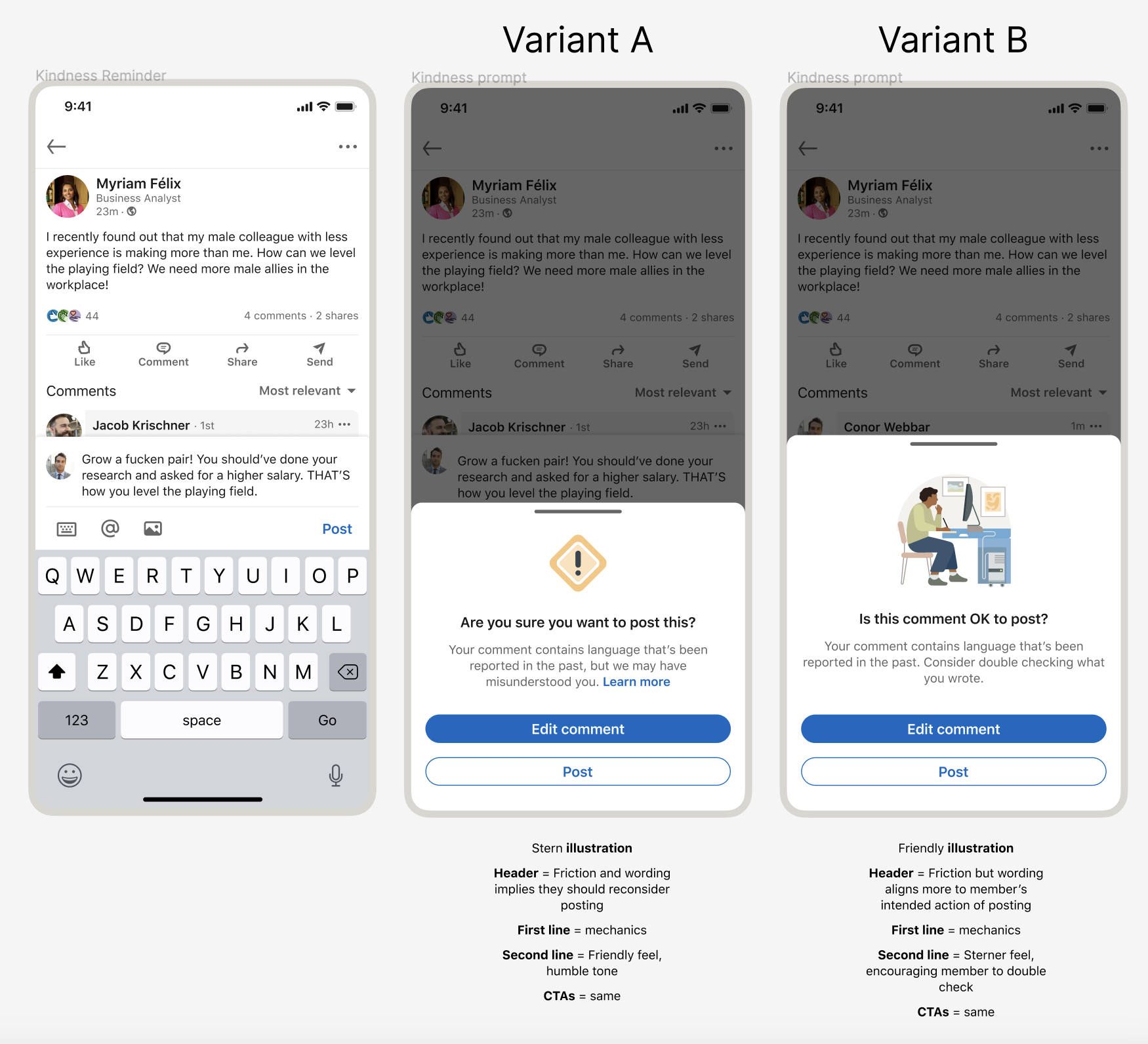

Kindness reminders

The problem

The LinkedIn ecosystem rewarded the comments with the most engagement by lifting them to the top of the conversation. However, these comments were often inflammatory and combative, and by giving them more visibility, we were exacerbating the problem. Unwanted comments affect the wellbeing of the person posting and reduce their trust in LinkedIn as a safe space to discuss ideas or build credibility for their professional brand. For a person seeking valuable conversations, combative comments erode their perception of LinkedIn as a place for professional engagement.

The solution

One part of our product strategy for reducing low-quality comments was to intervene at the earliest point in the comment lifecycle (creation) by reminding members to keep their commentary professional when we detect problematic language.

My role

Before settling on this solution, I participated in the team’s early brainstorming of pain points and possible solutions for comments. We narrowed down the ideas we wanted to put in front of members in UXR, and I helped craft copy for the prototypes. I actively took part in foundational UXR, including note-taking, debriefs, and analysis.

From this UXR, Kindness Reminders rose to the top as a team priority. I started by compiling a roundup of all related past work and explorations. This proved to be an invaluable resource for storytelling and for socializing the feature with leadership, and it helped us avoid past mistakes.

I worked closely with design, research, and product counterparts to develop variants for UXR. We decided not to state the triggering words in the modal to discourage “playing the system.”

I helped the research team develop content design questions.

Along the way, we learned that our MVP method for detecting unwanted comments had a false-positive rate of roughly 40%. Until we moved to the next phase of detection engineering, we needed new copy that worked for both scenarios: reminders triggered correctly and reminders triggered in error. I updated the copy for in-product A/B testing, varying the tone and messaging hierarchy.

I also worked closely with a product designer to design the flow for an exploratory generative AI feature, in which a commenter with low-quality content can edit their comment with the help of generated suggestions.

Outcome

We saw a statistically significant reduction in unique commenters posting target words. We also saw that around 12% of commenters who were presented with a kindness reminder opted not to post their comment at all.